Why and How MQTT is Used in AI/LLM Applications: Architecture and Use Cases

Table of Contents

Introduction

The rapid growth of the Internet of Things (IoT) and the advent of AI, especially Large Language Models (LLMs), have opened new possibilities for intelligent, connected systems. However, harnessing real-world IoT data to drive AI applications requires efficient, real-time communication. Traditional request/response methods often fall short in these dynamic environments.

MQTT (Message Queuing Telemetry Transport) has emerged as a lightweight, publish/subscribe messaging protocol ideally suited to bridge IoT devices with AI/ML services. It enables event-driven data flow, allowing AI systems to ingest and act on fresh sensor data continuously rather than only on demand. As one AWS blog notes,

“the generative AI must be continuously fed with fresh, new data to move beyond its initial, predetermined knowledge and adapt to future, unseen parameters. This is where the IoT becomes pivotal in unlocking generative AI’s full potential”.

MQTT’s efficiency and scalability make it a go-to standard for IoT communication, providing the real-time, reliable data pipeline that AI applications need.

This series will provide a deep dive into how and why MQTT is used in AI applications and LLM inference, structured to inform both top management and engineering teams. This first part explores the fundamentals of MQTT, its integration with AI, the technical architecture, and real-world use cases across various industries. The aim is to be beginner-friendly yet technically in-depth – offering insight into MQTT’s role in enabling intelligent, distributed systems.

MQTT and AI Integration Overview

MQTT at a Glance

MQTT is a publish/subscribe messaging protocol designed for resource-constrained devices and unreliable networks. Unlike HTTP’s one-way request-response pattern, MQTT uses a central broker to facilitate bi-directional communication between publishers (data senders) and subscribers (data receivers) on named topics. Once devices establish a connection, the broker can route any number of messages in both directions without the overhead of repeated handshakes. This architecture decouples senders and receivers – a perfect fit for distributed IoT and AI systems where many sensors, devices, and services must communicate in real time. MQTT’s lightweight design (minimal packet overhead, binary payloads, small header) minimizes network load and features like Quality of Service (QoS) levels, retained messages, and last-will testament add reliability in adverse network conditions.

“MQTT is a lightweight messaging protocol based on publish/subscribe model, specifically designed for IoT applications in low bandwidth and unstable network environments. It can provide real-time reliable messaging services for network-connected devices with minimal code. MQTT protocol is widely used in IoT, Mobile Internet, Smart Hardware, Internet of Vehicles, Smart Cities, Telemedicine, Power, Oil, Energy, and other fields.” -What Is MQTT and Why Is It the Best Protocol for IoT?

Why MQTT for AI and LLM Applications

Combining MQTT with AI/LLM inference brings together the best of both worlds — IoT devices generate live data streams, while AI provides intelligence to analyze and act on that data. MQTT’s event-driven model ensures immediate data exchange, allowing AI to make real-time decisions without delays. For example, an LLM-powered analytics service can subscribe to machine sensor topics and immediately receive updates when a reading goes out of range, triggering an AI-based anomaly detection model. Unlike HTTP polling, which requires frequent requests for updates, MQTT’s push-based approach delivers data as soon as it becomes available, significantly reducing latency and network congestion.

MQTT has become the preferred protocol for scalable IoT communication because it reliably transmits data from thousands of sources to consumers (like AI systems) even in low-bandwidth environments.

One of MQTT’s biggest advantages is that it decouples data producers from consumers, allowing:

- AI microservices to be added or updated independently, without impacting other systems.

- Device firmware to remain unchanged, as long as the AI knows which topics to subscribe to.

- Scalable AI workloads and adaptive prompt engineering strategies, making AI applications more flexible and future-proof.

In short, MQTT acts as the nervous system for AI-driven ecosystems, enabling real-time data flow so AI “brains” can operate efficiently in distributed environments.

Technical Architecture and Mechanisms

To understand how MQTT supports AI inference, let’s examine the architecture and data flow in a typical deployment:

Pub/Sub Data Pipeline

IoT devices are programmed as MQTT publishers. Each device publishes messages (e.g. sensor readings, status updates) to specific topic channels (strings resembling paths, like factory/line1/temperature). On the other side, AI services or LLM inference engines act as subscribers, registering their interest in relevant topics (they may use wildcards like factory/+/temperature to catch all lines). The MQTT broker sits in the middle, responsible for routing messages from publishers to all subscribers of the matching topics. This happens in real-time and in a decoupled fashion – devices don’t know which services are consuming their data, and services don’t directly query devices. The broker can handle thousands or millions of topics and clients concurrently, making the system highly scalable. Importantly, MQTT connections are long-lived over TCP, so once an edge device or AI service is connected, data flows with minimal handshake overhead, unlike HTTP which would incur headers and re-authentication on each request.

Event-Driven AI Inference

In this architecture, AI applications become event-driven. For example, consider an AI-powered monitoring system in a factory: sensors publish telemetry continuously, and an AI-based analytics engine subscribes to those feeds. When a sensor value indicates an anomaly, the AI engine immediately receives that event and can run inference (e.g. generate a natural language alert or a decision to adjust a setting). The response or decision can be published back via MQTT – perhaps on a factory/alerts topic – which could be picked up by a dashboard or an actuator controller. This end-to-end flow happens within milliseconds to seconds, enabling real-time responsiveness. It contrasts with polling systems where the AI might only find out about an issue on the next poll cycle (which could be seconds or minutes later). The asynchronous, non-blocking nature of MQTT means many events and AI inferences can be in flight simultaneously, maximizing throughput and utilization of the AI service.

Microservices and LLM Inference Pipelines

MQTT is commonly used as the messaging backbone in microservice architectures. An LLM inference workflow can be broken into multiple components – e.g. one service collects or aggregates IoT data, another service hosts or calls the LLM, and another handles results – all communicating via MQTT topics. This decoupling allows each component (data collection, inference, post-processing) to scale or be updated independently.

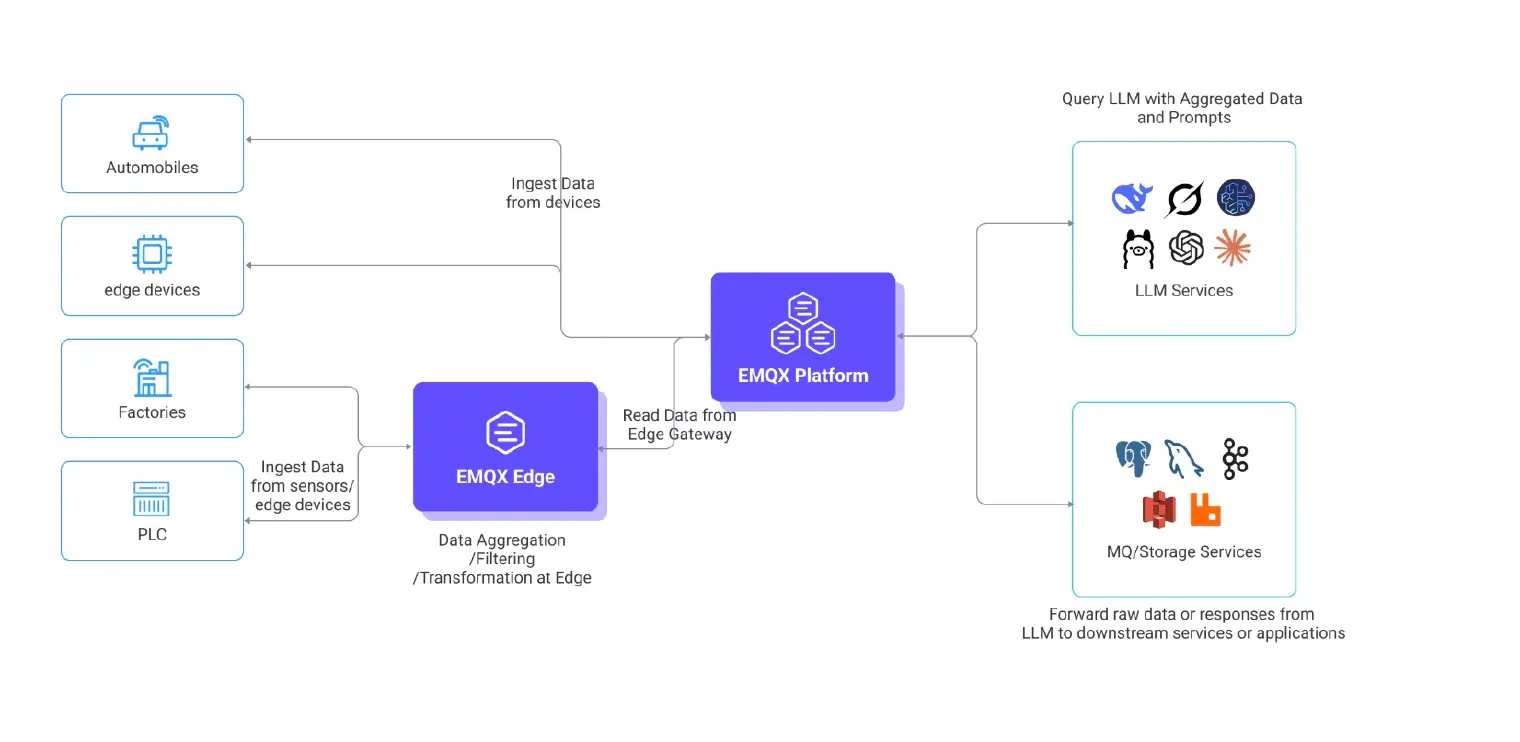

LLM + MQTT Architecture Diagram

The following diagram illustrates how an IoT system can integrate with LLM services across both edge and cloud. This setup applies to diverse IoT and industrial contexts—such as factories, automobiles, and other edge environments—where sensor data needs real-time analysis or decision-making by a large language model.

Data Ingestion and Edge Processing

Direct-to-Cloud Devices

In many IoT scenarios (e.g., connected vehicles or standalone sensors), end devices already include MQTT clients. They can establish a secure MQTT connection directly to the cloud-based MQTT broker and publish telemetry or status data in real-time.

Factory/Industrial Edge Gateway

In smart manufacturing environments, a large number of sensors, PLCs, and equipment typically communicate with a local edge gateway (e.g., EMQX Edge. The gateway aggregates, cleans, filters, and transforms the raw data before sending it onward. Typical examples include:

- Data Aggregation: Combining multiple sensor readings into a higher-level metric (e.g., the average temperature of a production line).

- Filtering & Cleansing: Removing erroneous or out-of-range readings so they do not pollute downstream analytics.

- Protocol Translation: Converting from legacy industrial protocols (Modbus, OPC UA, etc.) into MQTT for more seamless communication with the cloud.

Once processed, the gateway publishes these refined data streams to the central MQTT broker. By offloading some processing to the edge, network overhead is reduced and local actions can be taken even when cloud connectivity is limited.

Cloud MQTT Broker and LLM Interaction

Broker as Central Hub

Whether data arrives directly from devices or via an edge gateway, all messages end up at the cloud MQTT broker (e.g., EMQX Platform). The broker then routes incoming data based on topic subscriptions to any number of consumers—including LLM services and downstream storage or queue systems.

LLM Prompting and Inference

When deeper analysis or natural-language interactions are needed, a dedicated application subscribes to relevant MQTT topics or receives incoming data and pushes the data to an LLM service (such as a hosted GPT model or an on-premise large language model). That dedicated application:

- Constructs Prompts: Combines live sensor data, historical context, or user queries to build an LLM prompt.

- Calls the LLM API: Sends the prompt to the LLM and waits for the inference result.

- Publishes the Result: Once the LLM responds (e.g., with a natural-language insight or a recommended action), the result is published back via MQTT to the broker.

Results Delivery and Downstream Integration

- Returning to Edge or End Devices: If the LLM’s output includes commands, alerts, or other actionable intelligence, the MQTT broker forwards these messages back to the subscribing edge gateway or end devices. For instance, a factory PLC might receive a real-time adjustment command when the LLM detects an anomaly in sensor data.

- Storage and Message Queues: Alongside returning intelligence to the edge, the cloud MQTT broker can also push data or LLM responses to additional systems:

- Databases: Persist original sensor data, processed results, or LLM output in storage systems (e.g., PostgreSQL, MySQL, or S3) for later analysis and reporting.

- Other MQ Systems: Forward messages to Kafka, RabbitMQ, or other event-stream platforms for broader data processing, enterprise integration, or archiving.

Architectural Benefits

- Real-Time Responsiveness: MQTT’s publish/subscribe pattern ensures low-latency, event-driven data flow from the edge to LLM services and back.

- Flexible Edge–Cloud Collaboration: Edge gateways can handle preliminary data aggregation and filtering to reduce cloud overhead and support local decision-making.

- Decoupled, Scalable Design: New AI/LLM modules can be added without altering device firmware. The MQTT broker acts as a routing layer for all publishers and subscribers.

- Multi-Destination Data: One data stream can serve multiple consumers (analytics, dashboards, AI) thanks to MQTT’s topic-based distribution.

- Seamless Extension: Data and LLM outputs can also be forwarded to other storage solutions or message queues, ensuring a wide range of integration possibilities.

In short, MQTT enables a plug-and-play architecture for AI: sensor data (potentially processed at the edge) is published to an MQTT broker, which collaborates with an LLM service to generate insights or commands, and ultimately delivers those results wherever they are needed—whether that’s back to the edge, into a database, or to a downstream application. This architecture is inherently cloud-friendly (MQTT brokers can run on-premise or in the cloud), and also edge-friendly – brokers can be deployed at the edge or even on vehicles to enable local AI inference without cloud latency. Finally, MQTT’s support for retained messages means an AI service that (re)connects can immediately get the last known value of a sensor without waiting for the next update – ensuring the AI has stateful context from the startup.

AI and LLM Inference Use Cases with MQTT

MQTT’s versatility has made it a preferred choice for integrating IoT data with AI-powered intelligence across multiple industries. Below, we explore real-world use cases that highlight how MQTT and LLM/AI inference work together to enable real-time decision-making and automation.

Software-Defined Vehicles (Connected Cars)

Modern vehicles are often referred to as “data centers on wheels” - due to the vast amounts of telemetry they generate - including engine performance, driver behavior, and sensor feeds from lidar and cameras. Increasingly, these vehicles also rely on cloud-based updates and commands to enhance functionality and safety.

MQTT is widely used in connected car platforms as a messaging layer between vehicles and cloud services. It enables bidirectional communication, allowing vehicles to publish telemetry data (e.g., GPS, diagnostics, infotainment usage) and subscribe to incoming messages (e.g., remote commands, software updates, or even AI model parameters).

As software-defined vehicles evolve, they require a unified connectivity backbone that allows them to:

“Send telemetry data and receive updates, commands, and AI/ML models from the cloud” Software-Defined Vehicles Solution

For example, an autonomous or semi-autonomous car might publish sensor data to an MQTT broker, where a cloud-based AI model (such as an LLM-based voice assistant or an AI-driven driving policy system) analyzes the data. Based on the AI inference, MQTT can instantly send commands back to the vehicle, such as steering adjustments, driver alerts, or navigation optimizations.

Industrial IoT & Smart Manufacturing

Factories today are highly instrumented with sensors and machines continuously generating real-time telemetry—including temperature, pressure, and vibration readings. MQTT (often extended with Sparkplug B for industrial data) enables this live production data to be streamed to AI-powered monitoring and analytics systems. Key use cases include:

- Predictive Maintenance:

- Vibration and temperature sensors publish readings to an MQTT broker. AI models analyze trends and predict machine failures before they happen.

- Outcome: Proactive maintenance reduces downtime and repair costs.

- AI-Driven Quality Control:

- Computer vision systems on production lines publish inspection results via MQTT. AI algorithms detect defects and automatically trigger adjustments or alerts.

- Outcome: Real-time decision making improves product quality and efficiency.

By integrating MQTT and AI, manufacturers close the loop between data collection, analysis, and action, creating fully automated, intelligent production environments.

Smart Homes and Consumer IoT

MQTT is a foundational technology for smart home automation, acting as a communication layer for IoT hubs that connect sensors, actuators, and AI-driven assistants.

The addition of LLMs and AI makes smart homes far more intuitive and autonomous. For example, voice assistants powered by ChatGPT-based assistants can control home devices through MQTT. A user might say:

“Turn off the living room lights and set the thermostat to 72°F”

The voice assistant transcribes the request, an LLM interprets the intent, and then publishes an MQTT message that is received by the home automation system, which executes the command.

An EMQ demonstration showcased how natural language processing (NPL) + MQTT can control home devices (e.g., switches, lights, brightness settings) via ChatGPT-powered commands. This approach enhances user comfort and accessibility.

Beyond voice control, AI can introduce intelligent automation, such as:

- Learning household routines and publishing MQTT messages to adjust heating or lighting accordingly.

- Summarizing daily energy consumption via an LLM, helping users optimize energy use.

Just recently, Amazon introduced Alexa+, leveraging advanced LLM models to create more natural, context-aware interactions in smart homes. Similarly, AI platforms like Anthropic’s Claude are emerging as new integrations, reinforcing MQTT’s role in powering the next generation of voice-controlled home automation.

MQTT remains a preferred protocol in home automation due to its simplicity, low latency, and ability to push instant updates allowing devices to instantly report state changes and trigger automated routines.

Other Domains: Energy, Healthcare, and Smart Cities

Beyond the above, MQTT+AI use cases span numerous fields.

- Energy Management: Smart meters and grid sensors publish real-time power usage data via MQTT. AI models analyze demand fluctuations to optimize grid loads and predict outages.

- Healthcare & Remote Patient Monitoring: Wearables and health monitors stream patient vitals (e.g., heart rate, oxygen levels) to an AI diagnostic system via MQTT. AI detects anomalies (e.g., arrhythmia) in near real-time, alerting doctors or emergency responders when necessary

- Smart Cities & Urban Infrastructure: Traffic sensors, air quality monitors, and environmental sensors publish city-wide data via MQTT. AI models analyze trends and optimize traffic light timings, pollution control, and public safety measures

Cloud Integration: MQTT as the AI Data Ingestion Layer

Major cloud providers integrate MQTT natively into AI and data processing services:

- AWS IoT Core (MQTT-based) feeds into AWS AI services.

- Azure IoT Hub connects MQTT-enabled IoT devices to AI-driven analytics and decision-making models.

By acting as the real-time data pipeline for AI systems, MQTT ensures scalable, event-driven intelligence across industries.

Final Thoughts

MQTT’s publish/subscribe model and IoT-centric design have made it a cornerstone in AI-driven automation. From smart factories to connected vehicles and smart homes, MQTT enables real-time AI decision-making by seamlessly feeding live IoT data to AI models and disseminating AI-generated insights back to devices and users.

Stay Tuned for Next Piece

In this blog, we’ve laid the groundwork for understanding how MQTT serves as a critical bridge between IoT devices and AI/LLM applications. We explored its event-driven architecture, examined its real-world impact across industries like automotive, manufacturing, and smart homes, and highlighted how it enables real-time, AI-powered decision-making.

However, understanding the technology is just the beginning—effective deployment is where the real magic happens.

In our next blog post, we’ll take a hands-on approach to integrating MQTT into AI-driven projects. Expect practical insights on key topics like security, scalability, and performance optimization, alongside with a comparison of MQTT against other communication protocols to help you make the right choices for your AI architecture.

We’ll also address common challenges and explore emerging trends in MQTT’s role within AI innovation.

Stay tuned for our next piece and unlock the full potential of MQTT in shaping the future of AI!